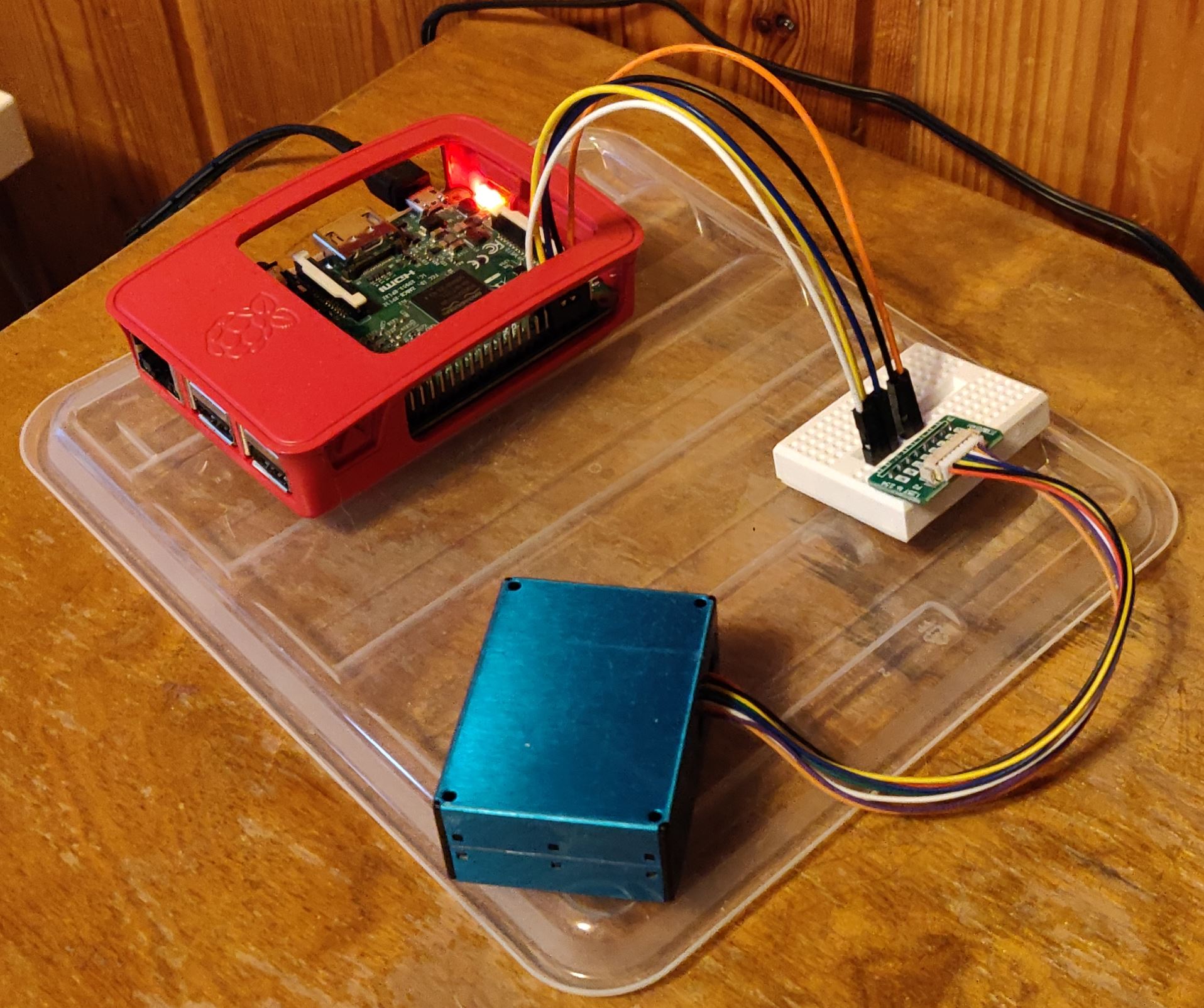

As the next step in my home monitoring setup with a Raspberry Pi, I got a Waveshare 4.2 inch e-ink screen so that I can see selected information from the Raspberry at a glance, without reaching for a phone or tablet. Primarily I intend to show the outdoor temperature and a few other readings. As you can see, the project is still a bit in “development”, at least casing-wise, but last weekend I managed to get all the info displayed and updated as intended. I was familiar with e-ink / e-paper from using ebook readers, and I like the idea that the screen keeps showing what it had when powered off and needs only a little power to update. Of course this screen does not have a backlight, but that is a smaller issue than having a bright LED screen or similar on 24/7.

For quick background: a few years ago I got familiar with RuuviTag (https://ruuvi.com/) and have a couple of these little devices around the house reporting temperatures. I placed one of them outdoors (with a wide temperature range battery, just in case temperatures drop low in winter, https://shop.ruuvi.com/product/ruuvi-cr2477t-wide-temp-battery/).

As I wrote previously, another device I currently have in use is an Airthings Wave Plus indoor air quality monitor, placed near my daily working spot.

Information from both of these devices is collected over Bluetooth on the Raspberry and displayed on the e-ink / e-paper screen (and also uploaded as CSV to Dropbox for storage and further processing if needed).

So how is all of this put together?

RuuviTag part:

First, information from the RuuviTag is collected using the library and examples from here: https://github.com/ttu/ruuvitag-sensor . I went with the experimental Bleson library, since I like to look for solutions with a longer possible lifespan, and for stability reasons. Previously I attempted to get the RuuviTag working with BlueZ on a Raspberry Pi 3 B+, but unfortunately this seemed to be a pretty unstable combination. The code sometimes ran for an hour or a couple, but then the whole Raspberry ended up in a non-responding state (not even answering SSH). After spending time with Google I found out that Bluetooth seemed to be a little problematic on that specific Raspberry. Luckily I also had a newer model to work with, and now the code has been running nicely. (The Raspberry Pi 3 B+ did a good job collecting data from the Airthings device, but this RuuviTag journey was a little too much for it.)

To get the RuuviTag working with Bleson I had to do a few additional steps (mainly the installation from here https://bleson.readthedocs.io/en/latest/installing.html) and some permission adjustments at each step while getting the examples working. As always: one step at a time, and one problem at a time. There was a decent amount of “head scratching”, but not too much, and I should have logged these steps to be able to write an in-depth post. At least one clear issue was seeing a lot of “End Of File (EOF). Exception style platform” exceptions when attempting to read RuuviTag data. I think this was primarily solved by granting the user account permission to access Bluetooth and by some setcap commands from the Bleson documentation.

Now I have the RuuviTag data collection running every 5 minutes (cron job), and the data is stored in a CSV file and uploaded to Dropbox (just to be able to check how cold it has been).
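The "store into a CSV file" step could look roughly like the sketch below. It is only an illustration, not the actual script from this setup: the function name `append_reading` and the column names are made up, and the real file may hold more fields.

```python
import csv
from datetime import datetime
from pathlib import Path

# Hypothetical column layout; the real CSV in this project may differ.
FIELDS = ["timestamp", "temperature", "humidity", "pressure"]


def append_reading(csv_path, reading, now=None):
    """Append one reading as a row, writing the header first if the file is new."""
    path = Path(csv_path)
    is_new = not path.exists() or path.stat().st_size == 0
    # Timestamp each row so the latest reading can be identified later.
    row = {"timestamp": (now or datetime.now()).isoformat(timespec="seconds")}
    row.update({key: reading.get(key) for key in FIELDS if key != "timestamp"})
    with path.open("a", newline="") as f:
        writer = csv.DictWriter(f, fieldnames=FIELDS)
        if is_new:
            writer.writeheader()
        writer.writerow(row)
    return row
```

A cron-driven collector would call something like `append_reading("ruuvi.csv", {"temperature": -3.5, "humidity": 80.0, "pressure": 1012.3})` once per run. Opening the file in append mode keeps older rows intact, so the newest reading is always the last line.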

Airthings part:

Data collection from the Airthings device runs as described in the previous post, every 7 minutes (so that the two jobs mostly do not fire at the same moment), and the data is also saved in a CSV file and then uploaded to Dropbox just in case.

Waveshare e-Ink screen:

And now the final part: the screen. I used this guide to set up the screen https://medium.com/swlh/create-an-e-paper-display-for-your-raspberry-pi-with-python-2b0de7c8820c and ordered the Waveshare 4.2 inch e-Paper module (a nice size for my use and a cost-efficient choice). Another choice would have been an e-paper HAT, but I wanted to keep the GPIO pins easily available for further use. The Waveshare 4.2 inch e-Paper module came with the necessary cable to connect the screen to the GPIO pins as mentioned in the article. I worked from the examples in the article and from Waveshare (https://www.waveshare.com/wiki/4.2inch_e-Paper_Module).

After getting the code working and figuring out what to print on the screen and where, I set the screen to update every 5 minutes during “wake hours” and once an hour at night.
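One way to get this two-speed schedule from a single 5-minute cron job is to let the script itself decide whether to redraw. The sketch below is an assumption about how that could be done, not the actual code: the function name `should_refresh` is made up, and the wake hours (07:00 to 23:00) are placeholder values, since the exact hours are not stated here.

```python
from datetime import datetime

# Assumed values: the post does not say which hours count as "wake hours".
WAKE_START_HOUR = 7   # inclusive
WAKE_END_HOUR = 23    # exclusive


def should_refresh(now):
    """Return True if the screen should be redrawn on this 5-minute tick."""
    if WAKE_START_HOUR <= now.hour < WAKE_END_HOUR:
        return True          # wake hours: refresh on every tick
    return now.minute < 5    # night: only the first tick of each hour


if __name__ == "__main__":
    print("refresh" if should_refresh(datetime.now()) else "skip")
```

Checking `now.minute < 5` instead of `now.minute == 0` leaves some slack in case cron starts the script a little late. The same effect could also be had with two separate cron entries.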

During a screen update, the displayed information is read from the CSV files with the idea of “take the last line from the CSV” and parse the needed info from it (both devices report a bunch of data, and only selected pieces are displayed on the screen).
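The “take the last line from the CSV” idea can be sketched as below. This is a minimal illustration that assumes the file starts with a header row; the function name `read_last_row` and the column name in the usage note are hypothetical.

```python
import csv


def read_last_row(csv_path):
    """Return the last data row of a CSV file as a dict keyed by the header.

    Returns None if the file has no data rows.
    """
    last = None
    with open(csv_path, newline="") as f:
        for row in csv.DictReader(f):
            last = row  # keep overwriting until the final row remains
    return last
```

With a column called `temperature`, the value to draw would then be `float(read_last_row("ruuvi.csv")["temperature"])`. This reads the whole file on every update, which is fine for a small file but is one of the places where CSV starts to show its limits as the data grows.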

As a next step I really need to start planning a database for collecting the information. So far CSV has been a very fast way to store the data and display it from there, but its limitations are starting to show, and I have been looking into Influx for visualising the data I am collecting. We’ll see where the journey takes me next.